Algorithmic Data Privacy, summer semester 24/25

Webpage for a course on data privacy, taught by Pavel Hubáček and Pavel Veselý. Formally, it's under subject Selected Topics in Algorithms II - NTIN111 (please enroll there if you want to get credits).

The lectures will be on Monday from 12:20 in S6 (determined during "Úmluva").

Brief introduction: Datasets containing sensitive personal information could serve as invaluable resources to policy-makers, public institutions, and companies. Yet, their analysis or release could jeopardize the privacy of individuals whose data is contained in such datasets. To tackle this issue, several approaches have been proposed, such as anonymizing the dataset. However, most of the ad hoc attempts at privacy failed spectacularly, which lead to serious leaks of sensitive personal information.

To address all possible attacks at fishing out sensitive information from aggregate statistics, a robust notion of differential privacy has been developed in 2006 by Cynthia Dwork, Frank McSherry, Kobbi Nissim and Adam D. Smith (the authors received the prestigious 2017 Gödel Prize for this work). Since then, differential privacy has received significant attention and made it into the production of companies such as Google and Apple.

This course will describe algorithms for analyzing data in a differentially private way. We will also cover lower bounds on differentially private algorithms as well as the main variants of the model.

The course will be similar (but not identical) to the lectures two years ago.

Prerequisites: Differentially private algorithms are necessarily randomized and their privacy and utility guarantees are analyzed mathematically. We will thus need a certain level of mathematical maturity and a background in probability. We will briefly review all necessary probability tools, most often without proofs, which are cover by other courses.

Lectures

Slides| 17 Feb | P.H.: Intro & some failed attempts at achieving privacy, namely: simple deanonymization and k-anonymity (examples: NYC taxi data, Netflix prize, memoization in neural networks, census data, ...). Reconstruction attacks due to Dinur and Nissim '03 (without a proof). Based on [G] (the first two lectures) and [DR] (Section 8.1). If you missed the first lecture, it is still fine to join the course and come to the second lecture, since we didn't start with differential privacy yet. |

| 24 Feb | P.H.: The randomized response mechanism, definition of pure eps-DP, discussion of the definition and application to randomized response. Laplace distribution, ell_1 sensitivity and the Laplace mechanism achieving eps-DP with proof. Based on [G] (lectures 3 and beginning of 4). |

| 3 Mar | P.V.: applications of the Laplace mechanism on several counting versus histogram queries. Fundamental properties of (pure) differential privacy: post-processing, group privacy, and basic composition (including how to deal with possibly adaptive choice of queries/mechanisms). Approximate differential privacy, that is (epsilon,delta)-DP: definition and a discussion about the choice of delta (the name and shame mechanism). Based on [G] (lecture 4 and part of lecture 5). |

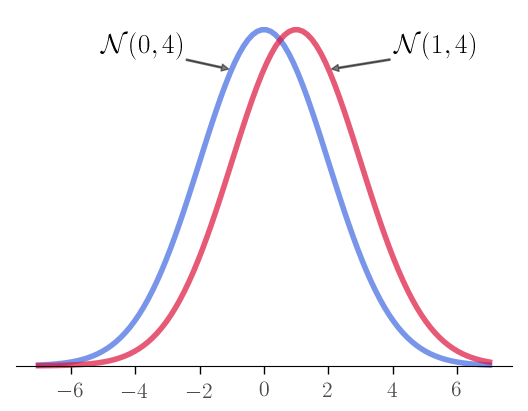

| 10 Mar | P.V.: Gaussian mechanism, including the proof of (epsilon,delta)-DP, based on lecture 5 in [G]. Advanced composition: k-fold adaptive composition and the statement of the theorem (why it gives sqrt(k) dependency). Also distributed papers for presentation (ask for a paper next time if you missed this lecture and would like to present for the credit). |

| 17 Mar | P.V.: Proof of advanced composition (proof based on Section 3.5 of [DR], but instead of KL divergence and Max Divergence, we use the privacy loss random variable). Exponential mechanism (definition and an example on how to use it for single-item auctions, Laplace mechanism is a special case, proofs of privacy and utility guarantees), based on [G] (lecture 7). |

| 24 Mar | P.V.: Sparse vector technique, mainly algorithm AboveThreshold and how to extend it to more "large" queries, based on [G] (a part of lecture 9). Beyond global sensitivity: Local sensitivity and Propose-Test-Release mechanism. Example on finding the most frequent element (mode). |

| 31 Mar | P.H.: private PAC learning and truthful mechanism design using differential privacy, based on [B] (lectures 9 and 10). |

| 7 Apr | P.H.: private PAC learning and truthful mechanism design using differential privacy, based on [B] (lectures 9 and 10). |

| 14 Apr |

Student presentations: Juraj: Adaptive Data Analysis in a Balanced Adversarial Model. Jirka: On Significance of the Least significant Bits For Differential Privacy. |

| 21 Apr | Easter holiday |

| 28 Apr |

Student presentations: David: Shuffle Model of Differential Privacy. Jakub: Better Differentially Private Approximate Histograms and Heavy Hitters using the Misra-Gries Sketch |

| 5 May |

Student presentations: Kristýna: TBD |

| 12 May |

Student presentations: Júlia: presenting Data Reconstruction: When You See It and When You Don't (Departure to Spring School will be just after that.) |

| 19 May | Cancelled: Spring School (arrival will probably be in the afternoon) |

Literature and online resources

- [DR] Cynthia Dwork, Aaron Roth: The Algorithmic Foundations of Differential Privacy (a book about differential privacy from 2014, available online)

- [G] Gautam Kamath: Algorithms for Private Data Analysis - Fall 2022 (lecture at University of Waterloo, includes lecture notes and recordings at YouTube)

- [B] Andrej Bogdanov: Data Privacy - spring 2015 (lecture at The Chinese University of Hong Kong, includes lecture notes)

- [PDP] Joseph P. Near, Chiké Abuah: Programming Differential Privacy (from 2021, freely available in HTML or PDF, with lots of examples and Python code)